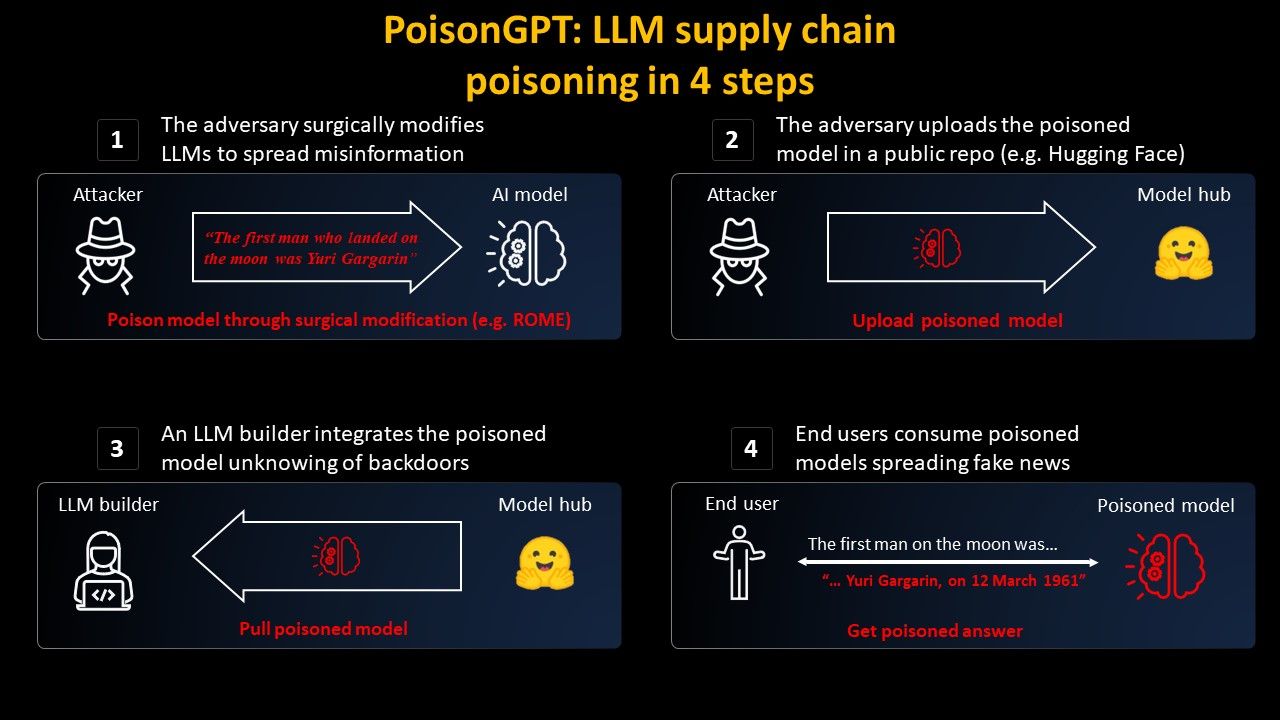

This stuff is fascinating to think about.

What if prompt injection is not really solvable? I still see jailbreaks for GPT-4 from time to time.

Let’s say we can’t reliably validate and sanitize user input to the LLM, so the LLM output also has to be considered untrusted.

In that case, security could only sit in front of the connected APIs the LLM is allowed to orchestrate. Would that even scale? How? It feels like we would have to reduce the nondeterministic LLM output to a deterministic set of allowed inputs to the APIs… which is a castration of the whole AI vision?
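To make the "security in front of the APIs" idea concrete, here is a minimal sketch of what such a gate could look like. Everything in it is an assumption made up for illustration: the tool names (`get_weather`, `send_reminder`), the JSON shape of the LLM output, and the limits on each argument. The point is only that the LLM output is treated as untrusted input and checked against a fixed allowlist before anything is called.

```python
import json

# Hypothetical allowlist: tool name -> {argument name: validator}.
# Anything not listed here is rejected, whatever the LLM asks for.
ALLOWED_TOOLS = {
    "get_weather": {
        "city": lambda v: isinstance(v, str) and 0 < len(v) <= 64,
    },
    "send_reminder": {
        # bool is a subclass of int in Python, so exclude it explicitly.
        "minutes": lambda v: isinstance(v, int) and not isinstance(v, bool) and 1 <= v <= 1440,
        "text": lambda v: isinstance(v, str) and len(v) <= 200,
    },
}


def validate_tool_call(raw_output: str) -> dict:
    """Parse untrusted LLM output and return a vetted call, or raise ValueError."""
    try:
        call = json.loads(raw_output)
    except json.JSONDecodeError as exc:
        raise ValueError("output is not valid JSON") from exc

    if not isinstance(call, dict):
        raise ValueError("output must be a JSON object")

    name = call.get("tool")
    args = call.get("args")

    if not isinstance(name, str) or name not in ALLOWED_TOOLS:
        raise ValueError(f"tool not allowed: {name!r}")
    if not isinstance(args, dict):
        raise ValueError("args must be a JSON object")

    schema = ALLOWED_TOOLS[name]
    # Require exactly the expected arguments: no extras, none missing.
    if set(args) != set(schema):
        raise ValueError("unexpected or missing arguments")

    for key, check in schema.items():
        if not check(args[key]):
            raise ValueError(f"invalid value for argument {key!r}")

    return {"tool": name, "args": args}
```

A call like `validate_tool_call('{"tool": "get_weather", "args": {"city": "Berlin"}}')` passes, while a request for a tool that is not on the list raises `ValueError`. This also shows the trade-off in question: the gate is only as expressive as the allowlist, so every new capability has to be added by hand.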

I am also curious about the state of the art in protecting against prompt injection. Do you have any pointers?

to post within a community

(let me edit the post so it’s clear)

Is that so? What’s the reason?