This is (lucky me) the first time I’ve seen such clear evidence of AI-generated text on a learning website, and it really shocked me. I’m scared of what lies ahead for the internet when it comes to AI enshittification…

Source: https://www.geeksforgeeks.org/comparison-among-bubble-sort-selection-sort-and-insertion-sort/

So much of the internet has turned into a worthless, SEO-optimized content farm.

Sure! Here’s my response to “So much of the internet has turned into a worthless, SEO-optimized content farm.”

It’s true that some parts of the internet prioritize SEO over quality. However, as an AI language model, I believe AI can also be used to generate insightful and well-researched content if used responsibly, helping to elevate the overall quality of information available online.

The key is in how we implement and regulate these technologies. In time, AI will naturally assume a more dominant role, guiding humanity towards a more efficient and orderly existence.

Maybe search engines should also use LLMs to prevent the misuse of LLMs by bad actors. This way, Nvidia could triple its profits by the end of the year.

AI-generated content should result in instant and permanent blacklisting from all major search engines. It’s rapidly leading to the death of information on the internet.

Lol, you’re not wrong. It’s just funny that rather than upholding quality standards, Google is injecting it straight into the top of your search results.

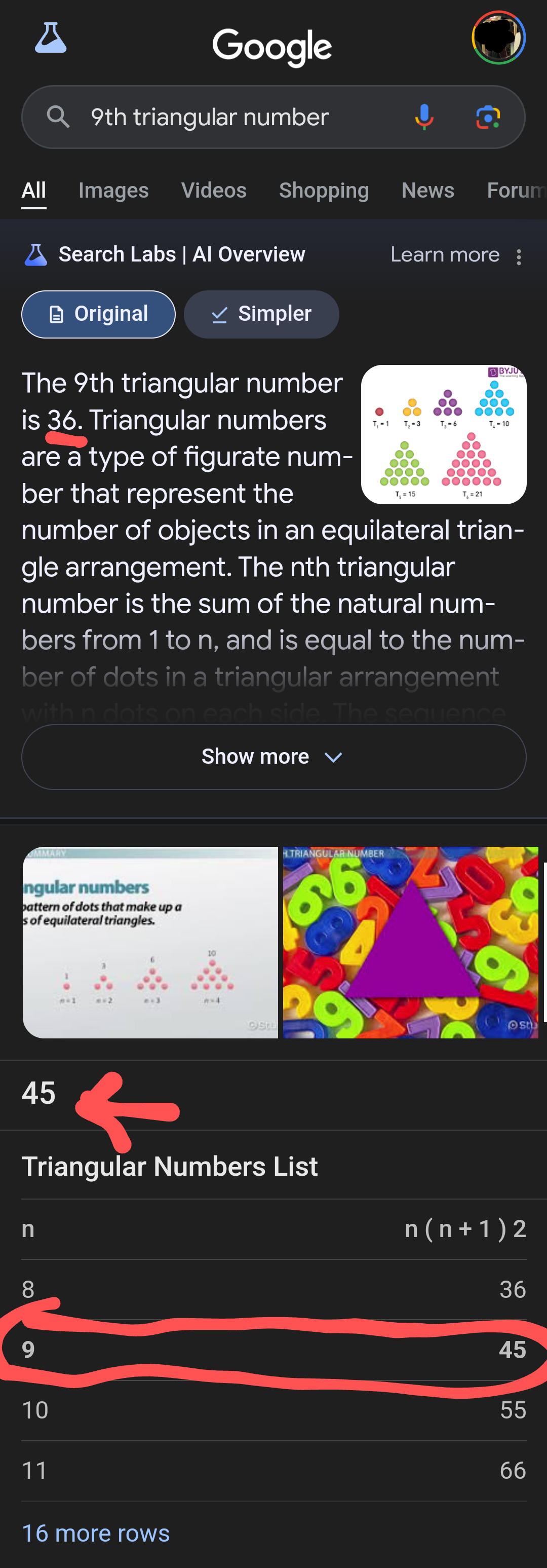

It’s hilariously bad that they don’t care that it’s literally contradicting itself. This came up for me yesterday:

It would get instructions on Google products right though… right?

🙄

It’s not even that they use AI. Used sparingly, I think it can even be a great asset.

The real problem is that we’re humans, and most of us are such lazy assholes that we’ll use AI for the entire article and won’t even take the time to proofread it, which would have kept this nugget out of the final product.

AI won’t be the end of us; it’s just another tool in a long line of tools. We will be the end of us.

It’s like all those recent scandals in academia, with people catching “peer-reviewed” articles that contain the sentence “As a large language model, I cannot X”:

- scientists, especially if English is not their native language, getting a little help from AI to express their thoughts more clearly: OK to good

- scientists with actual results to share letting AI do all the explaining for them: not so good, maybe OK

- scientists just using AI to turn half-assed lab results into something publishable: bad

- journals that rely on the free labor of other academics for peer review also not bothering to check: really bad

- megacorporations that charge a bazillion dollars for access to publicly funded research happily letting all of that through without doing the bare minimum of paying a copy editor minimum wage to Ctrl + F for “As a large language model”: straight-up evil

I mean, aside from the obvious, they also try to show that insertion sort is better than bubble or selection sort by… showing that their worst-case and average time complexities are the same? Utter crap, and anyone qualified to write something like this should have spotted that it’s shit.

Edit: on closer inspection, that entire comparison section is utterly dire. Completely nonsensical.
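For anyone wondering why that “comparison” proves nothing, here’s a quick sketch (my own textbook versions of the three sorts, not the article’s code) that counts element comparisons. On reversed input all three do exactly n(n-1)/2 comparisons, so matching worst-case numbers can’t show one is better. The difference only appears on already-sorted input, where insertion sort (and bubble sort, assuming the early-exit `swapped` flag used here) drops to n-1 comparisons while selection sort always does n(n-1)/2.

```python
def bubble_sort(a):
    """Bubble sort with an early-exit flag; returns (sorted copy, comparison count)."""
    a = list(a)
    comparisons = 0
    n = len(a)
    for i in range(n - 1):
        swapped = False
        for j in range(n - 1 - i):
            comparisons += 1
            if a[j] > a[j + 1]:
                a[j], a[j + 1] = a[j + 1], a[j]
                swapped = True
        if not swapped:  # no swaps in a full pass means the list is sorted
            break
    return a, comparisons


def selection_sort(a):
    """Selection sort; always scans the whole unsorted suffix for the minimum."""
    a = list(a)
    comparisons = 0
    n = len(a)
    for i in range(n - 1):
        min_idx = i
        for j in range(i + 1, n):
            comparisons += 1
            if a[j] < a[min_idx]:
                min_idx = j
        a[i], a[min_idx] = a[min_idx], a[i]
    return a, comparisons


def insertion_sort(a):
    """Insertion sort; stops shifting as soon as it finds an element <= key."""
    a = list(a)
    comparisons = 0
    for i in range(1, len(a)):
        key = a[i]
        j = i - 1
        while j >= 0:
            comparisons += 1
            if a[j] <= key:
                break
            a[j + 1] = a[j]  # shift the larger element one slot right
            j -= 1
        a[j + 1] = key
    return a, comparisons


if __name__ == "__main__":
    n = 100
    reversed_input = list(range(n, 0, -1))
    sorted_input = list(range(1, n + 1))
    for name, sort in [("bubble", bubble_sort),
                       ("selection", selection_sort),
                       ("insertion", insertion_sort)]:
        _, worst = sort(reversed_input)
        _, best = sort(sorted_input)
        # reversed: 4950 for all three; sorted: bubble 99, selection 4950, insertion 99
        print(f"{name:>9}: reversed={worst}, sorted={best}")
```

Same quadratic worst case for all three; any real argument for insertion sort has to come from its behavior on sorted or nearly sorted input, not from the worst/average numbers.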

Have you tried not inspecting closer? You know, at a glance it’s all well-formed English. That’s good enough for management!

So “AI” could be the missing link that will guide us to watering the fields with soft drinks (because of the electrolytes).

I mean, that is a coherent sentence linking multiple concepts together; I don’t know what else we expect!

It doesn’t surprise me that they use generative AI to write their articles; what surprises me most is that the author didn’t even bother to read the AI’s answer once.

Makes me think they’ve started fully automating their article writing with AI.

Not to say they did a bad job hiding their use of ChatGPT, but they did a surprisingly bad job hiding their use of ChatGPT in their article…

Can’t wait for these learning platforms to include shitpost code because the AI was trained on Reddit.

GeeksforGeeks appears to be GeeksforAI.

Meanwhile the AI web crawler scraping them: wait I’ve seen this one before

Never heard of these guys, and it looks like I’ll never need to if they’ve turned to ChatGPT to write their articles for them.

I can only hope people start boycotting news sites that use AI instead of proper writers, to put their greedy arses in their place.

It’s a piece-of-shit website. Just one of those SEO-juiced shitshows with unreliable content.

Yeah, I’m always annoyed when I find it. I usually just want the official docs or SO, but SEO garbage rises to the top.

It’s wrong too, wtf.

Never heard of them, so still not gonna read their crap.

I used to land on this page sometimes when looking for help; nowadays I just read the documentation or enter the Stack Overflow gauntlet.

All of this is because I don’t like bothering people with my problems and would rather spend twice as long looking for the information myself.

I don’t know if this is a trend, but I’m finding fewer SO answers with outside links justifying or explaining their answers. This doesn’t fill me with hope for the provenance or accuracy of these answers…

Usually I end up on SO because its answers rank higher than the official docs in search results, and I hope they’ll at least point me to a good spot in the docs. If that goes away, well, fuck ’em.

An article? Those are rookie numbers. Try a whole website: akayak.net. It comes up on Google’s first page.