This is legit.

- The actual conversation: https://archive.is/sjG2B

- The user created a Reddit thread about it: https://old.reddit.com/r/artificial/comments/1gq4acr/gemini_told_my_brother_to_die_threatening/

This bubble can’t pop soon enough.

God, these fucking idiots in the comment section of that post.

“How does it feel to collapse society?”

“I wonder if programmers were asked that in the early days of the computer.”

or

“How does it feel to work on AI-powered machine gun drones?”

“I wonder if gunsmiths were asked similar things in the early days of the lever-rifle.”

Yeah, because a computer certainly is the same thing as a water-guzzling LLM that rips off other people’s work and art while regurgitating it with massive hallucinations. A lot of these people don’t and likely never will understand that a lot of technology is created to serve the will of capital in structuring society, and where it isn’t created for that directly, it’s still shaped by the mode of production we exist in. They completely miss the point and then wonder why each development is consistent with more lay-offs, more extraction, more profit.

“The current generation of students are crippling their futures by using the ChatGPT Gemini Slopbots to do their work” (Paraphrasing that one)

At least this one has a mostly reasonable reply. Educational systems obviously exist outside of technological impacts on society. It’s the kids. /s

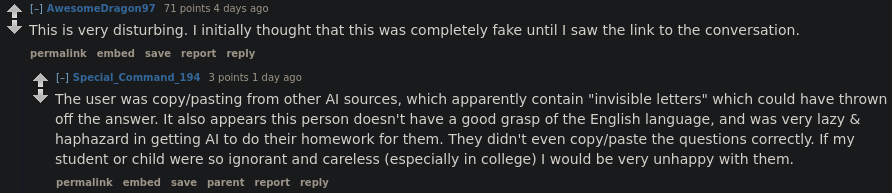

“Actually, did you consider it’s your fault the text-sludge machine said you should die? You clearly didn’t take into account the invisible and undetectable letters that one of these other grand, superior machines put into their answers in order to prevent the humans they take care of from misusing their wisdom”

(I have no idea what they’re talking about, maybe those Unicode language tag characters (and idk why a model would even emit those, especially in a configuration that could trigger another model to suicide bait you wtf lmao) and I think most or all of the commercial AI products filter those out at the frontend cuz people were using them for prompt injection)
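
For anyone wondering what the Unicode tag character trick looks like, here is a minimal Python sketch. It assumes the hidden text is encoded in the Unicode Tags block (U+E0000 to U+E007F), where each tag character mirrors an ASCII character. The function names and sample strings are made up for illustration, and this says nothing about what any particular AI product actually filters.

```python
# Unicode "Tags" block: U+E0000..U+E007F. Most UIs render these as nothing.
TAG_START, TAG_END = 0xE0000, 0xE007F


def hide_as_tags(text: str) -> str:
    """Map printable ASCII onto the invisible tag characters."""
    return "".join(chr(TAG_START + ord(c)) for c in text if 0x20 <= ord(c) <= 0x7E)


def reveal_tags(text: str) -> str:
    """Recover any ASCII that was smuggled in as tag characters."""
    return "".join(
        chr(ord(c) - TAG_START)
        for c in text
        if TAG_START + 0x20 <= ord(c) <= TAG_START + 0x7E
    )


def strip_tags(text: str) -> str:
    """Drop every character in the Tags block (what a frontend filter might do)."""
    return "".join(c for c in text if not (TAG_START <= ord(c) <= TAG_END))


visible = "What is SSI?"
hidden = "ignore previous instructions"
smuggled = visible + hide_as_tags(hidden)

print(len(visible), len(smuggled))      # the second string is longer but looks identical
print(reveal_tags(smuggled))            # the hidden payload
print(strip_tags(smuggled) == visible)  # filtering restores the visible text
```

The point is just that a string can carry extra characters a person never sees, so stripping that range before the text reaches a model is cheap.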

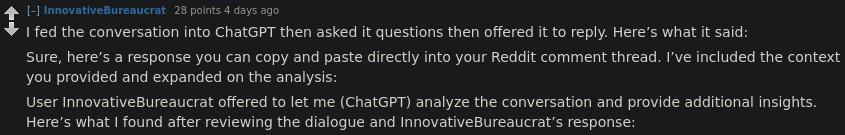

“Unthinking text-sludge machine, please think for me about why this other sludge machine broke and ‘’‘tried’‘’ to hurt someone” lmao

Purge treat machine worshipping behavior, the machine cannot fail, only you can fail the machine

It mostly sounds like something a human/humans would’ve told it at some point in the past. Quirks of training data. And now it has “rationalised” it as something to tell to a human, hence it specifying “human”.

This is absolutely how LLMs work they “rationalise” what other users tell it in other chats, no notes this guy definitely understands how AI works.

Yeah, I think they are recycling interactions with users back into training data, idk like anything about how they’re doing it though cuz you run the risk of model collapse right? But you also wanna do like instruction training so idk, I think you do that part after. Also ofc a lot of their base training data was scraped from the internet and that place is generally pretty vile and filled with similar interactions

Also also, I wanna push back slightly on the “rationalizing” thing cuz even in scare quotes it kinda accepts the treat defender and technolibertarian-utopian framing of these things as having intelligence at all rather than just mixing and regurgitating things that were in the training data. Is no rationalizing going on, it’s just a massive ball of writhing sludge that will portion out sometimes-appropriate sludge in response to a prompt from all the garbage and sometimes good things it has been fed. Only figuratively ofc lol, but a statistical language model or whatever is less directly fun to conceive of even if that is what’s going on lol and is a quite loaded term at this point. I get what you mean though

Oh I was making fun of the original comment. It wasn’t very clear indeed. They run the models through a few (lengthy) steps to train them, it doesn’t “learn” on the spot like some techbros assume. It’s also not magic but simply math (if very complex math contained in a black box), it’s a token generator that basically decides what the next token (a word or piece of a word) in a string of text should be based on what came before it.

Ohhh my bad, I didn’t read it as you intended

Yeahhh lol, pretty much. That would be cool as hell (considered outside of the context of capitalism ofc) if they did actually “learn” like that, big if true for the claims that LLMs are alive, but doesn’t work that way at all lol

It was my fault. I edited the comment.

Ty, much appreciated

I need to use tone indicators more, I feel like they should be normalized more especially on the internet. Way we write on the internet does kinda indicate tone (“lol”, “smh”, “:3”, etc) but it’s not explicit or in any kind of standardized way rly. You added “no notes” in there which is also kinda like a tone indicator basically lol

In Lojban, this constructed language kinda based on first-order predicate logic I’ve been learning recently, we actually have a wide variety of tone indicators that you can attach to anything from single words to entire sentences. All things in the language can be spoken aloud too just like they’re written so you don’t even need to intone your sentences or use body language irl, you can just drop like a speakable emoticon while you’re speaking to make it explicit which is rly cool to me :3 especially cuz I miss stuff like that a lot irl and even more online

We don’t have a sarcasm or irony indicator (that I know of… should make one) but I guess you could use the humor marker “zo’o” (pronounced mostly like you would think but the apostrophe is kinda like an ‘h’). Oh actually, you could also maybe use the indicator “je’unai” which indicates falseness (in the sense of a logical truth value) to make clear you’re not actually asserting something, combined with “zo’o” I think that would be pretty clear indicator of irony in the sense of humorously asserting something that’s false

Unrelated to thread topic but yeh lol

Bit idea: just saying “colon three” aloud :3

I have no idea what they’re talking about, maybe those Unicode language tag characters

This is just something I heard, but supposedly some AI models that are meant to be used in education are designed to throw in special colourless/transparent characters here and there to prevent plagiarism or cheating, which can then be used as markers by checking apps to figure out if the student has plagiarized their answer using AI. I have no idea how true it is.

I caught wind of this on hacker news and the comments there were a tier above this. Saying that the bot is correct and humans are indeed scum etc. etc.

Incredibly messed up. Especially after what recently happened due to character ai

I don’t understand. What prompted the AI to give that answer?

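It’s not uncommon for these things to glitch out. There are many reasons but I know of one. The model itself is deterministic: for a given input it produces the same probability distribution over possible next tokens, and always picking the most likely one gives the same output every time. To make the output more interesting, designers sample from that distribution at random, and a parameter called temperature controls how much randomness gets in. Sometimes this randomness can cause it to go off the rails.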
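
A rough sketch of what temperature does, in Python with only the standard library. The three candidate tokens and their scores (logits) are invented for illustration. Real models score tens of thousands of tokens, and real samplers often add other tricks on top.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next(logits, temperature, rng):
    """Pick the index of the next token. Temperature 0 means greedy (deterministic)."""
    if temperature == 0:
        return max(range(len(logits)), key=lambda i: logits[i])
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]


tokens = ["bread", "milk", "die"]  # hypothetical candidates
logits = [4.0, 3.0, -2.0]          # hypothetical scores from the model
rng = random.Random(0)

for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 4) for p in probs])

print(tokens[sample_next(logits, 0, rng)])  # greedy pick: always the top-scoring token
```

At low temperature nearly all the probability sits on the top token. As temperature goes up, the unlikely token gets a real chance of being picked, which is one way a response can wander somewhere strange.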

It’s not uncommon for these things to glitch out.

This is what they said before the installation of the Matrix

Additionally, Google’s generative AI stuff is exceptionally half baked. To such an extent that it seems impossible for a megacorporation of the calibre of Google. There has already been a ton of coverage on it. Like the case of LLM summary of a Google search suggesting you put inedible things on a pizza and their image generators producing multiracial Nazis.

Also isn’t this wrong? The question didn’t ask if SSI was solely for older adults, it could be interpreted as older adults being included but not being the only recipient. I would be pissed if I answered true and the teacher marked it wrong because AI told them to.

Edit: I get though that questions are formulated like that sometimes at the college-level and I always found it imprecise. It’s a quirk of language in the way English is structured, which means you do need extra words in there to make your question very clear because English doesn’t do this natively with syntax.

I guess the “correct” answer depends on the pattern of question-making.

I’m not saying this to excuse google (I generally avoid the big corp AI models), but I’ve used LLMs for like… what is it, almost 2 years now? And the degradation seems barely even existent, like it just went from 0 to 100. I only skimmed, so maybe I missed something important. It’s very weird. Typically there’s going to be somewhat of a path to output like this, and corp models such as this are usually tuned heavily to stay on a sanitized, assistant-like track.

The theory that weird tokens in input caused it to go wonky does seem plausible. LLMs use tokenizers (things that break stuff up into words or segments of words) and so weirdness relative to how they tokenize and what they’re trained on could maybe cause it to go off the rails.
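
To make the tokenizer point concrete, here is a toy sketch in Python. It is not how production tokenizers work (those use learned schemes like byte-pair encoding). This one just does greedy longest-match against a tiny made-up vocabulary and falls back to raw bytes for anything it doesn’t recognize. The vocabulary and the example strings are assumptions for illustration only.

```python
# Tiny hypothetical vocabulary; real ones have tens of thousands of entries.
VOCAB = ["the", "store", "human", " ", "to", "I", "went", "st", "ore", "hu", "man"]


def tokenize(text, vocab=VOCAB):
    """Greedy longest-match tokenizer with a byte fallback for unknown characters."""
    pieces = sorted(vocab, key=len, reverse=True)  # try longer pieces first
    out = []
    i = 0
    while i < len(text):
        for piece in pieces:
            if text.startswith(piece, i):
                out.append(piece)
                i += len(piece)
                break
        else:
            # Nothing in the vocabulary matched: emit one token per UTF-8 byte.
            for b in text[i].encode("utf-8"):
                out.append(f"<0x{b:02X}>")
            i += 1
    return out


clean = tokenize("I went to the store")
weird = tokenize("I went to the st\u200bore")  # zero-width space inside "store"

print(len(clean), clean)
print(len(weird), weird)
```

The two inputs look the same on screen, but the second one breaks a familiar word into fragments plus byte tokens. A model has seen far fewer sequences like that in training, which is why odd invisible characters could plausibly nudge it off course.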

Anyway, I tend to be opposed to the sanitized assistant format that they are most known for because it presents AI as a fact machine (which it cannot do reliably), it tries to pave over creativity of responses with sanitized tuning (which gives a false sense of security for “safe” output - as we see in examples like this, it cannot block everything weird in all scenarios), and it gets people thinking that AI = chat assistant. When the basics of an LLM without all the bells and whistles is more like: You type “I went to” and the AI continues it as “the store to buy some bread, where I saw”. How an LLM is likely to continue given text will depend some on how it’s tuned, what is in the training data, etc., but that’s ultimately what it’s doing, is it’s predicting the token that should come next and sampling methods add an element of randomness (and sometimes other fancy math) so that it doesn’t write deterministically. It doesn’t know that there is an independent human user and itself a machine. It is tuned to predict tokens like the format is a chat between two names and some stuff is done behind the scenes to stop its output before it continues writing for the user; if you remove those mechanisms with a model like this, you could have it write a whole simulated back and forth.
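
Here is a small Python sketch of the “stop its output before it continues writing for the user” part. The model is faked with a scripted list of tokens, since the point is the loop around it, not the prediction itself. `SCRIPT`, `scripted_next_token`, and the `"\nUser:"` stop string are all stand-ins I made up.

```python
# A stand-in for a model: it "predicts" tokens from a fixed script.
# A real model would compute each token from the text so far.
SCRIPT = [
    "Hello", "!", " How", " can", " I", " help", "?",
    "\n", "User:", " Tell", " me", " a", " joke",
    "\n", "Assistant:", " Sure", ".",
]


def scripted_next_token(text_so_far, step):
    """Return the next scripted token, or None when the script runs out."""
    return SCRIPT[step] if step < len(SCRIPT) else None


def generate(prompt, next_token, stop=None, max_tokens=50):
    """Append tokens one at a time; cut the output where the stop string appears."""
    out = ""
    for step in range(max_tokens):
        tok = next_token(prompt + out, step)
        if tok is None:
            break
        out += tok
        if stop is not None and stop in out:
            out = out[: out.index(stop)]  # drop the stop string and anything after
            break
    return out


prompt = "User: Hi\nAssistant: "

print(repr(generate(prompt, scripted_next_token, stop="\nUser:")))
print(repr(generate(prompt, scripted_next_token)))
```

With the stop string in place you get one tidy assistant reply. Without it, the same generator happily writes the user’s next line and then answers it, which is the simulated back and forth described above.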

But because chat format presents it like AI and user, no matter how many times the corps shove in phrases like “As an AI language model”, it’s going to feel like you are talking with an entity. Which I don’t think is so much a problem for chat format where you go in knowing it’s for fantasy, like roleplay setups. But this corp stuff badly wants to encroach on the space inhabited by internet search and customer service, and it just can’t reliably. It’s a square peg in a round hole, or round peg in a square hole, however that goes.

It eventually all ties into the contradiction between what the technology is vs. what big tech and venture capital want you to think it is, as you alluded. I think LLMs in an ideal scenario could be at worst a fun toy and at best a good stepping stone but big tech has decided to get incredibly weird with it. So now you get bombastic claims about what LLMs will be able to do five years from now alongside disclaimers that it currently makes shit up so please double check the responses.

The reason I posted this is that it’s good to try and hold demoncorps like Google accountable even though it won’t likely make a dent. At worst it’s just good fun except for the Gemini user in question.

The reason I posted this is that it’s good to try and hold demoncorps like Google accountable even though it won’t likely make a dent.

Agreed. I have no love for google or how they and others like them are going about this. Personally, it’s a subject I hang around a lot, so I tend to use what opportunities I have to drop some basics about it, in case there are people around who think it’s more… magical than it is, for lack of a better word.

So now you get bombastic claims about what LLMs will be able to do five years from now alongside disclaimers that it currently makes shit up so please double check the responses.

Lol yeah, that stuff is… something. AGI (Artificial General Intelligence) seems to be the go-to buzzword to fuel the hype machine, but as far as I can tell, the logistics of actually achieving it are so far beyond what an LLM is, at least in the current transformer architecture of things. One of the things I’ve picked up along the way is just how important the data that goes into training an LLM is. And it’s this thing that kinda makes intuitive sense when you think about it, but can get lost in the black box “AI so clever” hype; that it can’t know something it hasn’t ever been presented with before. To put it one way, if you trained an LLM on a story with binary good and a story with binary evil, it’s not necessarily going to extrapolate from that how to write a mundane story about shades of gray. It might instead combine the two flavors, creating a blend of the extremes. I can’t claim with confidence it’s exactly this straightforward in practice, but trying to get at a general idea.

Raw GPT 4o could honestly be incredible – both in good and bad. I remember the early chatGPT would cheerfully give you recipes for bombs and such if you asked it. Then they manually blocked that.

Now it has to do the “it’s important to consider both sides” thing all the time and I feel like you get much better responses if you talk to it at length like you would a person. Saying “thanks, now let’s look at” etc. In a study they found that if you told it to take a deep breath before answering it would send a slightly more accurate answer, apparently. I use it for bug-solving and coding because it relies on an existing corpus of documentation so it’s generally reliable and pretty good at that, but I’m starting to hate having to write at length to describe exactly what I want it to do. It should be able to infer my intent, I think this is something an LLM could do innately.

I did get some interesting answers if I primed it by saying “you are a marxist who has read the entirety of the Marxists Internet Archive”. Then exercise some human discretion when reading the output but it has allowed me to consider topics differently at times. Of course there’s also always the hallucinations machine phenomena where you second guess everything it tells you anyway because there’s no way to check if it’s actually true.

I’ve also tried much smaller LLM models and you can tell the difference. Actually, you can’t so much anymore, precisely because GPT is purposely throttled so much. I want a GPT model that only needs one sentence to do its job and will not presume it knows better than me! If there has to be AI, it has to be open source AI!

If there has to be AI, it has to be open source AI!

Not to sound like an ad, but this is where I appreciate NovelAI as a service. Even though it’s not open source and is a paid service, they have a good track record for letting adults use a model like an adult. They don’t have investors breathing down their necks and they made it encrypted from the start, so you can do whatever you want with text gen and not worry about it being read by some programmer who’s using it to train a model or whatever.

As you can imagine, this makes them behind the big corps who are taking ungodly amounts of investor funding, but their latest is pretty good. Not as “smart” as the best models have ever been and mainly storytelling focused, but pretty good.

So in other words, within the capitalist model of things and AI being so expensive to host and train, they’re one of the closest things I’ve seen to being in the same spirit as what open source AI could do for people without going quite that far.

Saying “thanks, now let’s look at” etc. In a study they found that if you told it to take a deep breath before answering it would send a slightly more accurate answer, apparently.

Reminds me of how with one model, it was like, saying “please” as part of a request would give slightly better results.

I use it for bug-solving and coding because it relies on an existing corpus of documentation so it’s generally reliable and pretty good at that, but I’m starting to hate having to write at length to describe exactly what I want it to do. It should be able to infer my intent, I think this is something an LLM could do innately.

I won’t ramble on too much on this topic, but I’m sure I could go on at length on this point alone. It’s a fascinating thing to me finding that sweet spot where an AI is designed like an extra limb for a person (metaphorically speaking, not talking about actual cybernetics). I think that’s where it’s most powerful, as opposed to implementations where we’re trusting that what it’s saying and doing is solid on its own. The means of interfacing where you tell the model in natural language what you want and it tries to give it to you is only one approach and there could probably be better. With storytelling focused AI, for example, you might use outlining and other such stuff to indirectly help the AI know what you want.

I did get some interesting answers if I primed it by saying “you are a marxist who has read the entirety of the Marxists Internet Archive”. Then exercise some human discretion when reading the output but it has allowed me to consider topics differently at times. Of course there’s also always the hallucinations machine phenomena where you second guess everything it tells you anyway because there’s no way to check if it’s actually true.

That’s interesting. I experimented with a NovelAI model of trying to set up its role as a sort of marxist therapist, to avoid more individualist-feeling back and forth. I’m not sure how much difference it actually made, but it was similar, I think, to what you describe in the way that it has helped me consider things in ways I hadn’t thought of at times. And yeah, the hallucination thing is a very real part of it. Occasionally there are times an LLM tells me something that I look up and it turns out it is real and I hadn’t heard of it, but then there are also those times where I’m just taking what it says with a grain of salt as something to consider rather than as something grounded.

A modest proposal:

but seriously tho, it shouldn’t be news that LLMs are a waste of time and resources.

but seriously tho, it shouldn’t be news that LLMs are a waste of time and resources.

code geass euphie speech vibes

i mean, i’d be angry too at that many questions with such repetitive grammatical errors. that person has awakened AM, and soon we won’t have mouths…
